Category work

Enhance your academic content’s credibility and make navigation of your articles easier on WordPress with these essential plugins. Source: Meet Academic Standards With These Essential WordPress Plugins For Scholarly Content Read the original story

Goal: better, more focused search for www.cali.org. In general the plan is to scrape the site to a vector database, enable embeddings of the vector db in Llama 2, provide API endpoints to search/find things. Hints and pointers. Llama2-webui –… Continue Reading →

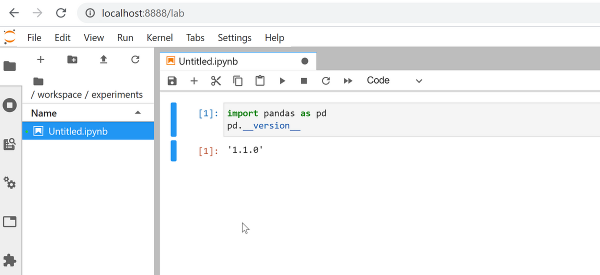

Here’s a great quick start guide to getting Jupyter Notebook and Lab up and running with the Miniconda environment in WSL2 running Ubuntu. When you’re finished walking through the steps you’ll have a great data science space up and running… Continue Reading →

In the world of data, textual data stands out as being particularly complex. It doesn’t fall into neat rows and columns like numerical data does. As a side project, I’m in the process of developing my own personal AI assistant…. Continue Reading →

The longer holiday weekend edition. Opportunities and Risks of LLMs for Scalable Deliberation with Polis — Polis is a platform that leverages machine intelligence to scale up deliberative processes. In this paper, we explore the opportunities and risks associated with… Continue Reading →

What I’m reading today. How Unstructured and LlamaIndex can help bring the power of LLM’s to your own data All You Need to Know to Build Your First LLM App — A Step-by-Step Tutorial to Document Loaders, Embeddings, Vector Stores… Continue Reading →

Large language models (LLM) are notoriously huge and expensive to work with. An LLM requires a lot of specialized hardware to train and manipulate. We’ve seen efforts to transform and quantize the models that result in smaller footprints and models… Continue Reading →

One can use this notebook to build a pipeline to parse and extract data from OCRed PDF files. Warning: When using LLMs for entity extraction, be sure to perform extensive quality control. They are very susceptible to distracting language (latching… Continue Reading →